Monday, August 10, 2020

Why run for President in Battlestar Galactica?

Let's say that it's in the first half of the game, before the sleeper agent phase. It's a 5-player game and you think that everyone is human. Someone else is President. Why would you want to spend the action and cards necessary to make yourself President instead? Because one of you might become a Cylon.

Let's say all players are going to get one more loyalty card. So there are five cards, two of which are Cylon cards. The probability that one (or more) of you turns Cylon is $1 - P(\text{neither Cylon})$. $P(\text{neither Cylon}) = \frac{3}{5}\cdot\frac{2}{4}$. That is, one of you gets a human card ($3/5$), and then the other also gets one of the remaining human cards ($2/4$). Thus,

\begin{align}
P(\text{one or more of you become Cylon}) &= 1 - \frac{3}{5}\cdot\frac{2}{4} \\
&= 1 - \frac{3}{10} \\
&= 0.7.
\end{align}

Don't forget about the case where both of you end up as Cylons. Then the resources you spent are "wasted", and maybe you look suspicious. But maybe that's okay, because it gives you cover for the depleted resources and lets you stay hidden. To keep things simple, let's take this case out and assume that switching has no value when both of you become Cylons.

\begin{align}
\begin{split}
P(\text{one of you become Cylon}) &= P(\text{one or more of you become Cylon}) \\
& \phantom{= } - P(\text{both of you become Cylon})
\end{split}
\end{align}

\begin{align}
P(\text{one of you become Cylon}) &= 0.7 - \frac{2}{5}\cdot\frac{1}{4} \\
&= 0.7 - 0.1 \\
&= 0.6
\end{align}

Thus, it is more likely than not that you and the current President are going to end up on opposite teams, so you'd be better off having more power. The remaining question is whether or not you think the benefit is worth the cost.

Let's assign this a shorter variable, $p_0$, for use later on.

\begin{align}
p_0 &= P(\text{one of you become Cylon}) \\
&= 0.6.
\end{align}
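As a quick check on the arithmetic above, here is a minimal sketch that enumerates every way two of the five loyalty cards can land on you and the President. The function name `pair_probabilities` and the `"C"`/`"H"` card labels are illustrative choices, not anything from the game.

```python
from fractions import Fraction
from itertools import permutations


def pair_probabilities(cards):
    """Return P(at least one Cylon), P(both Cylon), P(exactly one Cylon)
    for two players who each receive one card from `cards`."""
    # Every ordered pair of distinct cards is equally likely.
    pairs = list(permutations(range(len(cards)), 2))
    total = len(pairs)
    at_least_one = sum(1 for a, b in pairs if "C" in (cards[a], cards[b]))
    both = sum(1 for a, b in pairs if cards[a] == "C" and cards[b] == "C")
    return (
        Fraction(at_least_one, total),
        Fraction(both, total),
        Fraction(at_least_one - both, total),
    )


# Five cards for the sleeper agent phase, two of them Cylon.
at_least_one, both, exactly_one = pair_probabilities(list("CCHHH"))
print(at_least_one, both, exactly_one)  # 7/10 1/10 3/5
```

Using exact fractions rather than floats makes it easy to compare against the hand calculation.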

I'll be honest: I used to think it was inefficient to squabble about the Presidency unless you knew the President was a Cylon. But a forum post about the importance of control in the game got me thinking about this case. It's interesting to me that the game is structured in a way that encourages loyal humans to do selfish things even without explicit personal goals (a la Dead of Winter). This is an interesting emergent property of the rules.

We've considered the case when there aren't any Cylons out. Now let's look at having one Cylon out before the sleeper agent phase. There are three sub-cases: you're the Cylon, the President is the Cylon, or someone else is the Cylon. We've already tacitly assumed above that if you or the President is or becomes a Cylon, it's worth it to take the Presidency. So those cases are taken care of. If someone else is a Cylon, then we're similar to the above analysis, but with a smaller probability that one of you becomes a Cylon in the sleeper agent phase. In this case there is only one Cylon card among the five remaining loyalty cards.

\begin{align}
P(\text{one of you become a Cylon}) &= 1 - P(\text{both of you stay human}) \\
&= 1 - \frac{4}{5}\cdot\frac{3}{4}\\
&= 1 - \frac{3}{5} \\
&= \frac{2}{5} = 0.4
\end{align}

Here the probability of one of you becoming a Cylon is lower. One interesting case to consider here is if the existing Cylon gets the second Cylon loyalty card. In this case, the Cylon can then give this card to one of the humans. This is another opportunity for you or the current President to become a Cylon, and thus end up on opposite teams. Assuming this happens, and not knowing anything about the Cylon's strategy in selecting a target, there is a probability of $2/4 = 0.5$ that the Cylon selects you or the President out of the four humans. However, the President is arguably a higher-value target because of the powers of the office. For the rest of the analysis, we'll assume that a Cylon with two loyalty cards does give the second card away, with equal probability to each of the other players. The resulting increase in the probability that one of you becomes a Cylon, $\Delta p = \Delta P(\text{one of you becomes a Cylon})$, is as follows.

\begin{align}
\Delta p &= P(\text{Cylon with two cards}) \cdot P(\text{Cylon gives card to one of you}) \\
&= \frac{1}{5} \cdot \frac{2}{4} \\
&= \frac{1}{10} = 0.1
\end{align}

Thus, our corrected probability for one of you becoming a Cylon is,

\begin{align}
P(\text{one of you become a Cylon}) &= 0.4 + 0.1 \\
&= 0.5.
\end{align}

As before, let's assign this a shorter variable, $p_1$, for use later on.

\begin{align}
p_1 &= P(\text{one of you become a Cylon}) \\
&= 0.5.
\end{align}

Now, let's consider the case when two Cylons are out, which I'd argue doesn't depend on when in the game it occurs. (I choose my words carefully here. I would argue this if pressed, but I'm not going to because I'm lazy.) There are four sub-cases: you are a Cylon, the President is a Cylon, both of you are Cylons, neither of you are Cylons. In the first two cases, it makes sense to get the Presidency, as you and the President are on opposite teams. If neither of you are Cylons, it doesn't make sense (unless the President isn't using the office well). If both of you are Cylons, I'd say in general it doesn't make sense, unless the President is already suspected and you can keep the office on your team by taking it.

What is the confidence (i.e. assessed probability) you must have that a third party is a Cylon to make it not worthwhile to go for the presidency? As a break-even point we could assign a probability of 0.5. A better approach would be to have values assigned for the benefit of taking the presidency from the opposing team as well as the cost of moving the presidency. Determining those values is beyond the scope of this analysis, so we'll stick with a probability threshold of 0.5, meaning we're looking for the point at which it's more likely than not that you have incentive to take the presidency. This critical point occurs according to the following equation.

\begin{align}
0.5 &= p_0 \cdot P(\text{no Cylons}) + p_1 \cdot P(\text{one Cylon}) + 0 \cdot P(\text{two Cylons})\\
&= 0.6 \cdot P(\text{no Cylons}) + 0.5 \cdot P(\text{one Cylon})
\end{align}

I included the two Cylon case to point out that the no and one Cylon cases are not the only possibilities. There's no $1-p$ type tricks here in general. Now, if we do assume that there is at most one other Cylon, then the condition becomes easier to conceptualize. Then it simplifies as follows.

\begin{align}
0.5 &= 0.6 \cdot (1 - P(\text{one Cylon})) + 0.5 \cdot P(\text{one Cylon}) \\
&= 0.6 + (-0.6 + 0.5) \cdot P(\text{one Cylon}) \\
&= 0.6 - 0.1 \cdot P(\text{one Cylon}) \\
P(\text{one Cylon}) &= \frac{0.6-0.5}{0.1} \\
&= 1
\end{align}

Here, as long as the probability of two Cylons is zero, the break-even point is only reached when we are certain that a third party is a Cylon. For any smaller probability of one Cylon, we're more likely than not to end up on opposite teams from the President, even though we are both presently human, and thus have reason to take the office.

As a reference it may be useful to know the probability of each of those cases (and the previous cases we've considered). If you have a human card, and you're sure that the President is also human, you know there are two Cylon cards and six human cards left. Three of those cards have been dealt, while five remain for later.

\begin{align}
P(\text{all humans}) &= \frac{6}{8} \cdot \frac{5}{7} \cdot \frac{4}{6} \approx 0.357 \\
P(\text{one Cylon}) &= \frac{6}{8} \cdot \frac{5}{7} \cdot \frac{2}{6} \cdot\underbrace{3}_{{\text{3 ways to assign Cylon}}} \approx 0.536 \\
P(\text{two Cylons}) &= \frac{6}{8} \cdot \frac{2}{7} \cdot \frac{1}{6} \cdot \underbrace{\binom{3}{2}}_{\text{ways to assign Cylons}}\\
& = \frac{6}{8} \cdot \frac{2}{7} \cdot \frac{1}{6} \cdot \underbrace{3}_{= \frac{3!}{2! \cdot 1!}} \approx 0.107
\end{align}

Check, does that add up to one? Yes. We can combine this to find the probability that you and the President will end up on opposite teams, given that you both start off as human (without any assumptions about the other players).

\begin{align}
p &= p_0 \cdot P(\text{no Cylons}) + p_1 \cdot P(\text{one Cylon}) + 0 \cdot P(\text{two Cylons})\\
&\approx 0.6 \cdot 0.357 + 0.5 \cdot 0.536 + 0 \cdot 0.107 \\
p&\approx0.482
\end{align}

Thus, without any information pertaining to the loyalty of the other players, you're slightly more likely than not to stay on the same team as the President. However, perhaps counter-intuitively, if you feel you can trust everyone, you're more incentivized to go for the presidency yourself.

And what if you're not certain that the President is human? What if all you know is that you're human? Given that, the President is human with probability $P(HH|H) = 7/9$ and a Cylon with probability $P(CH|H) = 2/9$. Here $P(HH|H)$ refers to the probability that both the President and you are human given that you are human. Similarly, $P(CH|H)$ is the probability that the President is Cylon (now) and you are human (now) given that you are human (now). We can express the probability that you and the President end up on opposite teams given that you are human, $P(O|H)$, as follows. Here $O$ refers to the event that you and the President end up on opposite teams. We're still focusing on opposite teams, and ignoring possible benefits to the Cylons if resources are expended moving the presidency.

\begin{align}
P(O | H) = P(O | HH) \cdot P(HH | H) + P(O | CH) \cdot P(CH | H)
\end{align}

We previously computed $P(O|HH)$, which is the $p$ immediately above.

Now let's find $P(O|CH)$, the probability that you and the President end up on opposite teams given that the President is a Cylon before the sleeper agent phase while you are human. There are similar cases to before. There are two ways that the two of you can remain on opposite teams. First, another player can receive the remaining Cylon card. Since there are five players and five loyalty cards, only one of which is Cylon, everyone has the same probability of receiving the Cylon card. Thus, another player receives the Cylon card with probability $3/5$. Second, the President can receive the Cylon card (probability $1/5$) and give it to another player aside from you. Assuming the President gives it out randomly, the probability that this happens and you remain human is $\frac{1}{5}\cdot\frac{3}{4}$.

\begin{align}
P(O|CH) &= \frac{3}{5} + \frac{1}{5}\cdot\frac{3}{4} \\
&=\frac{12+3}{20}\\
&=0.75
\end{align}

Pulling everything together, we get the following.

\begin{align}
P(O | H) &\approx 0.482 \cdot \frac{7}{9} + 0.75 \cdot \frac{2}{9}\\
&\approx 0.542
\end{align}

This is slightly above 0.5. So given that you're human before the sleeper agent phase, you're slightly more likely than not to end up on the opposite side from the President, given all of the assumptions that we've made. However, once you get some information about how the other people are playing, you can throw out most of the probabilities regarding the current state of the game, and only pay attention to those that affect the future of the game. Individual behavior can be much more revealing than statistics.
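The chain of conditional probabilities in this post is easy to slip on by hand, so here is a sketch that recomputes it from the card counts with exact fractions. It relies on the same assumptions as the text: a 5-player game with ten loyalty cards (two Cylon), a Cylon who draws a second Cylon card hands it to a uniformly random other player, and only the "opposite teams" outcomes count. All variable names are illustrative.

```python
from fractions import Fraction as F
from math import comb

# No Cylons out: five cards (two Cylon) remain for the sleeper agent phase.
at_least_one = 1 - F(3, 5) * F(2, 4)   # you or the President (or both) turn
both = F(2, 5) * F(1, 4)               # both of you turn
p0 = at_least_one - both               # exactly one of you turns

# One third-party Cylon out: five cards (one Cylon) remain.
direct = 1 - F(4, 5) * F(3, 4)         # one of you draws the Cylon card
handed = F(1, 5) * F(2, 4)             # the Cylon draws it, then hands it to one of you
p1 = direct + handed


def cylons_among_others(k):
    """P(k Cylons among the other three players | you and the President are human).

    Eight cards (two Cylon, six human) are unaccounted for; three were dealt.
    """
    return F(comb(2, k) * comb(6, 3 - k), comb(8, 3))


# P(O | HH): both of you are human now.
p_o_hh = p0 * cylons_among_others(0) + p1 * cylons_among_others(1)

# P(O | CH): the President is a Cylon now and you are human.
p_o_ch = F(3, 5) + F(1, 5) * F(3, 4)

# Law of total probability, given only that you are human.
p_o_h = p_o_hh * F(7, 9) + p_o_ch * F(2, 9)

for name, value in [("p0", p0), ("p1", p1), ("P(O|HH)", p_o_hh),
                    ("P(O|CH)", p_o_ch), ("P(O|H)", p_o_h)]:
    print(f"{name} = {value} ~ {float(value):.3f}")
```

Changing the hand-off assumption (for example, a Cylon who always targets the President) only requires editing the `handed` and `p_o_ch` lines.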

Monday, August 3, 2020

Is it possible an event is never drawn?

A deck of cards with values in a 2d3 distribution (so a single card with a value of 2, two value 3 cards, etc) and a 10th reshuffle card. On a reshuffle card, draw a replacement card, until you get a value (i.e. not the reshuffle) card. I was going to add another card that has a specific in-game event, and was trying to work out how likely it was that card would appear before the game ends (about 50 draws, not tied to the cards): I think it is possible, albeit unlikely, that it may not appear at all (although the longer the game goes on, the less likely that is, from my understanding of probabilities).

—paraphrased from here on BGG

This can be solved with a Markov chain, which is a way to model sequences of probabilistic events, where the probabilities involved can be expressed in terms of the state after the previous step in the sequence. In this case, the state represents what's still in the deck. We'll have a different state for each possible number of cards remaining in the deck: 11 (only possible before the first turn), 10, 9, ..., and 1. The last we'll use as a special state, primarily not to denote when one card is left, but the fact that an event has been drawn. For simplicity, I'll not keep track of how many events have occurred (although we could expand to include that).

First we need a vector representing the probabilities of the initial state, which is known to be 11 cards. This is the state vector, $\mathbf{s}$.

\begin{align} \mathbf{s} = \begin{bmatrix} 1\\ 0\\ 0\\ 0\\ 0\\ 0\\ 0\\ 0\\ 0\\ 0\\ 0 \end{bmatrix} \end{align}

Here each subsequent entry in the vector represents a different state, and the values are the probabilities of being in each state.

We also need the transition matrix. That is, given a state, what are the probabilities of moving to another state. Consider when there are $c$ cards left. There are three or four relevant possibilities, depending on how you count.

1. You could draw a number card, leaving $c-1$ cards, which occurs with probability $(c-2)/c$.

2. You could draw a shuffle card (probability $1/c$), and then draw a number card (probability $9/10$, as we can ignore drawing the shuffle card again), leaving 10 cards with probability $1/c \cdot 9/10$.

3. You could draw an event card, with probability $1/c$, and end up in the special event state (by our reckoning).

4. You could draw a shuffle card (probability $1/c$) and then draw the event card (probability $1/10$), and end up in the special event state with probability $1/c \cdot 1/10$.

We can combine 3 & 4 to find the total probability of getting an event, which is $1/c + 1/c\cdot1/10 = 1/c \cdot 11/10$.

For the first round the probabilities are a little bit special, as we have 11 cards. Thus, the shuffle card doesn't do anything but delay the game. The two possible outcomes are draw a number card (probability $9/10$) and draw an event card (probability 1/10).

Putting this together, we have the transition matrix, $\mathbf{P}$. Each column represents the initial state, and each row represents the next state, in both cases starting with 11 remaining cards, descending to 2, and ending with the special event state.

\begin{align} \mathbf{P}& = \begin{bmatrix} 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ \frac{9}{10} & \frac{9}{10}\cdot\frac{1}{10} & \frac{9}{10}\cdot\frac{1}{9} & \frac{9}{10}\cdot\frac{1}{8} & \frac{9}{10}\cdot\frac{1}{7} & \frac{9}{10}\cdot\frac{1}{6} & \frac{9}{10}\cdot\frac{1}{5} & \frac{9}{10}\cdot\frac{1}{4} & \frac{9}{10}\cdot\frac{1}{3} & \frac{9}{10}\cdot\frac{1}{2} & 0 \\ 0 & \frac{8}{10} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & \frac{7}{9} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & \frac{6}{8} & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & \frac{5}{7} & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & \frac{4}{6} & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & \frac{3}{5} & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & \frac{2}{4} & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & \frac{1}{3} & 0 & 0 \\ \frac{1}{10} & \frac{11}{10}\cdot\frac{1}{10} & \frac{11}{10}\cdot\frac{1}{9} & \frac{11}{10}\cdot\frac{1}{8} & \frac{11}{10}\cdot\frac{1}{7} & \frac{11}{10}\cdot\frac{1}{6} & \frac{11}{10}\cdot\frac{1}{5} & \frac{11}{10}\cdot\frac{1}{4} & \frac{11}{10}\cdot\frac{1}{3} & \frac{11}{10}\cdot\frac{1}{2} & 1 \\ \end{bmatrix} \end{align}

Note a check that we can do is that each column must sum to 1. That is, the probability of transitioning from one state to one of the states is 1. This is because all possible states are incorporated in the matrix.
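The column-sum check is easy to automate. The following is a sketch that builds the same transition matrix from the per-state rules above and verifies that every column sums to exactly 1. The names `STATES` and `build_transition_matrix` are my own, and the matrix is stored as a plain list of rows so that no third-party library is needed.

```python
from fractions import Fraction as F

# State order matches the matrix above: 11 cards, 10, ..., 2, then "event".
STATES = [11, 10, 9, 8, 7, 6, 5, 4, 3, 2, "event"]


def build_transition_matrix():
    """Return P as a list of rows, where P[row][col] is the probability of
    moving from state STATES[col] to state STATES[row] in one round."""
    n = len(STATES)
    idx = {state: i for i, state in enumerate(STATES)}
    P = [[F(0)] * n for _ in range(n)]

    # First round: 11 cards, so the shuffle card only delays the draw.
    P[idx[10]][idx[11]] = F(9, 10)
    P[idx["event"]][idx[11]] = F(1, 10)

    for c in range(10, 1, -1):
        col = idx[c]
        if c > 2:
            # Draw a number card directly, leaving c - 1 cards.
            P[idx[c - 1]][col] += F(c - 2, c)
        # Draw the shuffle card, then a number card, leaving 10 cards.
        P[idx[10]][col] += F(1, c) * F(9, 10)
        # Draw the event directly, or the shuffle card and then the event.
        P[idx["event"]][col] += F(1, c) * F(11, 10)

    # Once an event has been seen we stay in the event state.
    P[idx["event"]][idx["event"]] = F(1)
    return P


P = build_transition_matrix()
column_sums = [sum(row[col] for row in P) for col in range(len(STATES))]
print(all(total == 1 for total in column_sums))  # True
```

Exact fractions avoid any floating-point doubt about whether a column sums to precisely 1.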

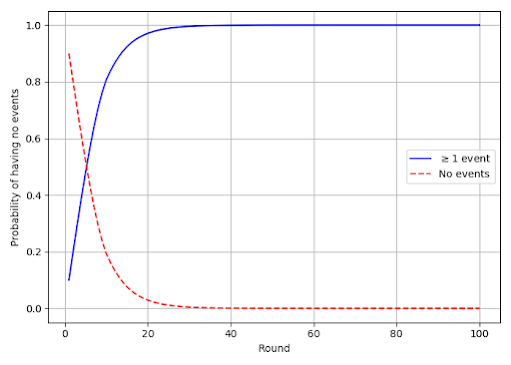

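To find the probability of each state in round $r$, we just compute $\mathbf{P}^r \mathbf{s}$. I've done this for 1–100 rounds below. It's quite rare for a game of 50 rounds to have no events: from any state with 10 or fewer cards, an event appears with probability at least $\frac{11}{10}\cdot\frac{1}{10} = 0.11$ per round, so the probability of no event in 50 rounds is at most $0.9 \cdot 0.89^{49} \approx 0.003$. Also note that after about 10 rounds we seem to have reached a stable condition, where the probabilities of being in each of the states aren't changing much (relative to the total probability of not having had an event yet).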

Figure 1: Probabilities of having some and no events

Figure 2: Probabilities of having no events, log scale
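For readers who want to reproduce the curves, here is a minimal sketch that applies the transition rules round by round, which is equivalent to multiplying the state vector by the transition matrix each round. It only tracks the states where no event has been seen yet, so the remaining probability mass is the chance of no event so far. The function name `no_event_probability` is hypothetical.

```python
def no_event_probability(rounds):
    """Probability that the event card has not been drawn after `rounds` rounds."""
    # state[c] = probability of having c cards left with no event so far.
    state = {c: 0.0 for c in range(2, 12)}
    state[11] = 1.0
    for _ in range(rounds):
        nxt = {c: 0.0 for c in range(2, 12)}
        for c, p in state.items():
            if p == 0.0:
                continue
            if c == 11:
                # First round: only a number card keeps us event-free.
                nxt[10] += p * 9 / 10
            else:
                if c > 2:
                    # Number card drawn directly.
                    nxt[c - 1] += p * (c - 2) / c
                # Shuffle card, then a number card.
                nxt[10] += p * (1 / c) * (9 / 10)
        state = nxt
    return sum(state.values())


for r in (1, 2, 10, 25, 50):
    print(r, no_event_probability(r))
```

Plotting these values on a log scale should show the roughly straight line that indicates a steady per-round decay.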

We can expand this solution to not only look at the probability of whether or not an event has occurred, but instead to look at the probability of an event in any given round. The most straightforward application of the previous method would be to (nearly) multiply the number of states by the number of events possible (i.e. the number of rounds). This keeps track of however many events we may be interested in, just as we've kept track of the binary state of whether any events have occurred. However, this is very inefficient and quickly leads to very large matrices. Instead, we can take note of the fact that if we look at the current round, the only thing that affects whether we draw an event is whether it is still in the deck, not how many times we've drawn an event previously. Thus, we can merely (roughly) double the number of states, with about half corresponding to the cases where the event card is still in the deck, and the rest corresponding to the cases where the event card has already been drawn.

The first state is again the one with 11 cards left, which is equivalent to the case when there is 1 card left. If one card is left, it must be the reshuffle card, which, when drawn at the beginning of the next round, leaves 11 cards just as at the start of the game. The next 9 states are when 10–2 cards remain in the deck, including the event card. The final 9 states are when 10–2 cards are left in the deck, but the event card is not in the deck.

The probabilities are much as before, except that we have now broken up cases 3 & 4 from above, and we consider the transitions between the various numbers of possible cards left in the deck without the event card. The probability of decreasing by one card in the deck, both without the event card, is the probability of not drawing the reshuffle card, $(c-1)/c$. Also note that when there are two cards left there's a probability of $0.5$ that the reshuffle card remains. This is equivalent to the initial state of having 11 cards, as previously mentioned, which is why the probability of moving from the two 2-card states to the 11-card state is $1/2$.

From any of these states, we can compute the probability of drawing an event card. This is equal to the sum of certain elements of the matrix we just constructed. We need to sum the elements which correspond to moving from a state with the event card in the deck to one without it. This includes the cases when the reshuffle card is drawn. To this we must add the cases when the event card is initially out of the deck, the reshuffle card is drawn, and finally the event card is drawn. That is, every entry in the 11th row counts. We must also consider the $1/2$ probability that we go from 2 cards with the event card to 11 cards left, as this really represents drawing the event card and leaving only the reshuffle card in the deck. Using this we can construct a matrix, $\mathbf{G}$, with all of these probabilities as the elements, such that when we multiply it by any probabilistic state vector, we get the probability of drawing an event card from that state.

Using $\mathbf{G}$, $\mathbf{P}$, and $\mathbf{s}$, we can find the probability of drawing an event in any round.

\begin{align} P(\text{event in round } r) = \mathbf{G} \mathbf{P}^r \mathbf{s} \end{align}

The result of this equation is plotted in Fig. 3. We see a perhaps somewhat unexpectedly consistent result that appears to be exactly equal to 0.1. But is it? Does this continue forever? We can certainly easily compute the probability for any practical number of rounds, and any deviation from exactly 0.1 in our computations appears to be due to computer rounding error.

Figure 3: Probabilities of drawing an event in current round
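To rule out rounding error as an explanation, the calculation can be repeated with exact fractions. The sketch below does not form the matrices explicitly. Instead it pushes the probability distribution through the same 19 states one round at a time and adds up the probability of the transitions in which the event card is drawn, which plays the role of $\mathbf{G}$. The state labels (`"with"`, `"without"`, `START`) and function names are my own.

```python
from fractions import Fraction as F

START = ("start", 11)  # 11 cards; same as only the reshuffle card being left


def transitions(state):
    """Return (next_state, probability, event_drawn) triples for one round."""
    kind, c = state
    out = []

    def after_reshuffle(p):
        # Reshuffle drawn: the round ends on one of the 10 other cards.
        out.append((("with", 10), p * F(9, 10), False))
        out.append((("without", 10), p * F(1, 10), True))

    def shrink(next_kind, p, event):
        # A non-reshuffle card is drawn directly, leaving c - 1 cards.
        nxt = (next_kind, c - 1) if c - 1 >= 2 else START
        out.append((nxt, p, event))

    if state == START:
        after_reshuffle(F(1))
    elif kind == "with":
        if c > 2:
            shrink("with", F(c - 2, c), False)   # number card
        shrink("without", F(1, c), True)         # event card
        after_reshuffle(F(1, c))
    else:
        shrink("without", F(c - 1, c), False)    # number card
        after_reshuffle(F(1, c))
    return out


def event_probabilities(rounds):
    """Exact probability of drawing the event card in each round."""
    dist = {START: F(1)}
    result = []
    for _ in range(rounds):
        nxt, p_event = {}, F(0)
        for state, p in dist.items():
            for new_state, q, event in transitions(state):
                nxt[new_state] = nxt.get(new_state, F(0)) + p * q
                if event:
                    p_event += p * q
        result.append(p_event)
        dist = nxt
    return result


probs = event_probabilities(30)
print(all(p == F(1, 10) for p in probs))  # True
```

Because the arithmetic is exact, a `True` here means the value is 1/10 in each of those rounds, not merely close to it.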

So let's prove it. First, we'll quickly dispense with the simple case when there are 11 (or 1) cards left in the deck. Here we must end by drawing a non-reshuffle card, of which there are ten with only one being an event. Thus the probability of drawing an event given that there are 11 cards left is $1/10$.

\begin{align} P(\text{event} | C = 11) = 0.1 \end{align}

Note a slight change in notation here. After writing the above equation, it became obvious that the number of cards left is really a random variable, and hence the switch to a capital $C$. The rest of the cases rely on some observations about the relationship between having an event card in the deck and not when there are a given number of cards, $c$, left in the deck. That is, given $C$, what is the probability that the event card is still in the deck?

\begin{align} P(\text{event in deck} | C = c) = ? \end{align}

Let's consider how we get to having $c$ cards left at the beginning of a round. For this to happen, in the rounds immediately preceding, the $11-c$ cards not in the deck must have been drawn. What is the probability of doing that while leaving the event card in the deck? Keep in mind that this is conditioned on not drawing the reshuffle card in any of these rounds.

\begin{align} P(\text{draw value} | \text{don't shuffle}, C=c) = \frac{c-2}{c-1} \end{align}

We need one such factor for each of the $11-c$ cards that have already been drawn, so the number of factors depends on the number of cards left.

\begin{align} P(\text{event in deck} | C = c) &= \prod_{i=c+1}^{11} \frac{i-2}{i-1} \\ \require{cancel}&= \frac{\cancel{9}}{10} \cdot \frac{\cancel{8}}{\cancel{9}} \cdot \frac{\cancel{7}}{\cancel{8}}\cdots \frac{\cancel{c}}{\cancel{c+1}} \frac{c-1}{\cancel{c}} \\ &= \frac{c-1}{10} \end{align}

The probability of drawing an event depends on whether or not the event card is still in the deck. These probabilities largely follow from our initial analysis.

\begin{align} P(\text{event} | \text{event in deck}, C = c) &= \underbrace{\frac{1}{c}}_{{\text{draw event directly}}} + \underbrace{\frac{1}{c}\cdot\frac{1}{10}}_{{\text{draw reshuffle then event}}} \\ P(\text{event} | \text{event not in deck}, C = c) &= \underbrace{\frac{1}{c}\cdot\frac{1}{10}}_{{\text{draw reshuffle then event}}} \end{align}

When the event is not in the deck, the only way to draw it again is to first draw the reshuffle. Putting these together we can find the following.

\begin{multline} P(\text{event}) = P(\text{event} | \text{event in deck}) \cdot P(\text{event in deck}) \\+ P(\text{event} | \text{event not in deck}) \cdot \left ( 1 - P(\text{event in deck} ) \right ) \end{multline}

In our case (conditioning on $C=c$), we can find what we're looking for.

\begin{align} P(\text{event} | C = c) &= \left (\frac{1}{c} + \frac{1}{c}\cdot\frac{1}{10} \right ) \cdot \frac{c-1}{10} + \frac{1}{c}\cdot\frac{1}{10} \cdot \left ( 1 - \frac{c-1}{10} \right ) \\ &= \frac{1}{c} \cdot \frac{11}{10} \cdot \frac{c-1}{10} + \frac{1}{c} \cdot \frac{1}{10} \cdot \frac{11-c}{10} \\ \require{cancel}&= \frac{11\cancel{c}}{100\cancel{c}} - \cancel{\frac{11}{100c}} + \cancel{\frac{11}{100c}} - \frac{\cancel{c}}{100\cancel{c}} \\ &=\frac{11}{100} - \frac{1}{100} \\ &= \frac{10}{100} \\ &= \frac{1}{10} \end{align}

Here we see that, indeed, regardless of how many cards there are in the deck, the probability of drawing an event always works out to be exactly 0.1. Two effects exactly cancel each other out. On the one hand, when there are fewer cards, it's more likely to draw an event card that is still in the deck. On the other hand, with fewer cards it's less likely that the event card is still in the deck.
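The cancellation can also be checked numerically for every deck size. This is a small sketch with exact fractions. The helper names are illustrative, and the product runs over the deck sizes $c+1$ through 11 from which a card was drawn to reach $c$ cards.

```python
from fractions import Fraction as F


def p_event_in_deck(c):
    """P(event card still in deck | c cards left), as a telescoping product."""
    prob = F(1)
    for i in range(c + 1, 12):          # deck sizes c+1, ..., 11
        prob *= F(i - 2, i - 1)         # a value card was drawn, not the event
    return prob


def p_event_given_cards(c):
    """P(event drawn this round | c cards left), by total probability."""
    in_deck = p_event_in_deck(c)
    if_in_deck = F(1, c) + F(1, c) * F(1, 10)   # directly, or via the reshuffle
    if_not_in_deck = F(1, c) * F(1, 10)         # only via the reshuffle
    return if_in_deck * in_deck + if_not_in_deck * (1 - in_deck)


for c in range(2, 11):
    print(c, p_event_in_deck(c), p_event_given_cards(c))
```

Each row should show the in-deck probability changing with `c` while the last column stays at 1/10.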

There is yet a simpler way to see that the probability of drawing an event every round is 0.1. Consider the symmetry of the situation. Every round ends with a non-reshuffle card being drawn. There is no difference in the 10 non-reshuffle cards that impacts the probability of them being drawn. Therefore, the probability that each is drawn in any round must be 0.1. You may ask whether drawing the event card affects the probability of it being drawn in a subsequent round. It does, but that effect only shows up in conditional probabilities, such as the probability of an event given whether the event card is still in the deck. The unconditional probability for each round stays at 0.1.
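The symmetry argument can be sanity-checked by simply playing the deck. The following is a rough Monte Carlo sketch, assuming (as in the analysis above) that drawing the reshuffle card returns all 11 cards to the deck and that drawing continues until a non-reshuffle card comes up. The function name, card labels, and seed are arbitrary choices.

```python
import random


def simulate_event_rate(rounds, seed=0):
    """Fraction of rounds in which the event card is the card that counts."""
    rng = random.Random(seed)
    full_deck = ["reshuffle", "event"] + ["value"] * 9
    deck = full_deck[:]
    rng.shuffle(deck)
    events = 0
    for _ in range(rounds):
        card = deck.pop()
        while card == "reshuffle":
            # Rebuild and reshuffle the whole deck, then draw a replacement.
            deck = full_deck[:]
            rng.shuffle(deck)
            card = deck.pop()
        if card == "event":
            events += 1
    return events / rounds


print(simulate_event_rate(200_000))
```

The simulated rate should land close to 0.1, with the small remaining gap due to sampling noise.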